A/B testing is a simple, data-driven way to improve your email campaigns. It works by sending two versions of an email that differ in one small element (like a subject line or button) to separate groups of subscribers. The version that performs better is then sent to the rest of your audience.

Here’s why it matters:

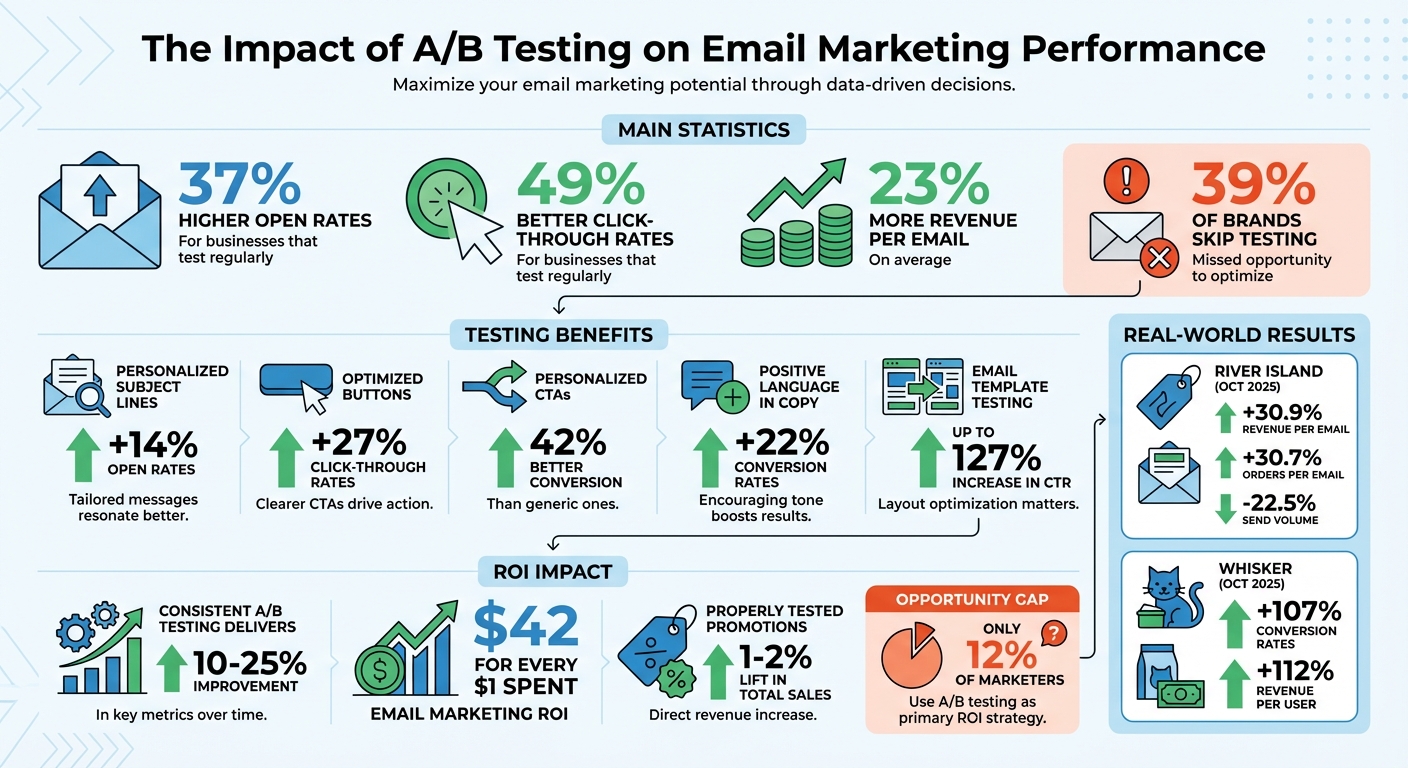

- 37% higher open rates and 49% better click-through rates for businesses that test regularly.

- 23% more revenue per email on average.

- Personalized subject lines can boost open rates by over 14%, and replacing text links with buttons can increase clicks by 27%.

Despite these benefits, 39% of brands skip testing, leaving growth potential untapped. Testing elements like subject lines, call-to-action buttons, and email layout helps you understand what works for your audience, leading to better engagement and higher conversions.

Start small: test one element at a time, use clear hypotheses, and analyze your results. Over time, these insights will refine your strategy and maximize your email marketing ROI.

A/B Testing Email Marketing Statistics and Impact

Benefits of A/B Testing in Email Marketing

A/B testing transforms email marketing from guesswork into a precise, data-driven approach. It allows marketers to make informed decisions based on real user behavior, not assumptions.

Better Engagement Metrics

Testing individual email elements helps pinpoint what resonates with your audience. Small changes - like tweaking a subject line, replacing a text link with a button, or using a personalized sender name - can lead to measurable improvements. For instance, personalized subject lines can increase open rates by over 14%, while swapping a text link for a button can lift click-through rates by 27%. These seemingly minor adjustments can add up to major performance gains over time.

A great example of this is fashion retailer River Island. In October 2025, they shifted from relying on assumptions to systematically testing their email cadence and content types. The result? A 30.9% boost in revenue per email and a 30.7% rise in orders per email - all while reducing their total send volume by 22.5%.

These kinds of engagement wins highlight how data-backed decisions can shape broader marketing strategies.

Data-Driven Decisions

Every A/B test generates valuable insights about your audience, helping you move beyond generic benchmarks. As Camila Espinal, Email Marketing Manager at Validity, puts it:

"It gives you the data you need so you can step away from taking a shot in the dark and use real information to sharpen your campaigns, improve engagement, and connect with your audience."

Surprisingly, only 12% of marketers rely on A/B testing as a primary strategy for ROI. This suggests that brands that consistently test and refine their campaigns can gain a clear advantage over competitors.

Armed with these insights, marketers can fine-tune the entire customer journey to drive better results.

Higher Conversion Rates

The impact of A/B testing extends far beyond open rates - it's a tool for optimizing the entire customer experience. For example, personalized calls-to-action convert 42% better than generic ones, and using positive language in email copy can boost conversion rates by 22%. Even testing different email templates has been shown to increase click-through rates by as much as 127%.

Pet care brand Whisker demonstrated this in October 2025 by testing personalized messaging throughout their customer journey. By aligning email content with specific website variations, they achieved a 107% increase in conversion rates and a 112% rise in revenue per user. This shows how small, data-driven adjustments can lead to significant results.

Consistent A/B testing typically delivers a 10–25% improvement in key metrics. Given that email marketing averages an ROI of $42 for every $1 spent, even modest gains can have a big financial impact. Properly tested promotions can also drive a 1–2% lift in total sales, which adds up when applied across multiple campaigns throughout the year.
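
A quick worked example makes the arithmetic concrete. This is a minimal Python sketch: the $42 return per $1 is the average cited above, while the annual spend and the 10% lift (the low end of the 10–25% range) are hypothetical inputs you would replace with your own figures.

```python
# Hypothetical illustration of how a test-driven lift scales email revenue.
# AVERAGE_ROI_PER_DOLLAR is the industry average quoted in the text;
# the spend and lift passed in below are made-up example inputs.

AVERAGE_ROI_PER_DOLLAR = 42.0


def incremental_revenue(annual_spend: float, lift: float,
                        roi_per_dollar: float = AVERAGE_ROI_PER_DOLLAR) -> float:
    """Extra revenue when testing lifts email revenue by `lift` (0.10 means 10%)."""
    baseline_revenue = annual_spend * roi_per_dollar
    return baseline_revenue * lift


if __name__ == "__main__":
    # Hypothetical: $5,000 of annual email spend and a 10% lift from testing.
    extra = incremental_revenue(annual_spend=5_000, lift=0.10)
    print(f"Baseline revenue: ${5_000 * AVERAGE_ROI_PER_DOLLAR:,.0f}")
    print(f"Extra revenue from a 10% lift: ${extra:,.0f}")
```

With these inputs the sketch prints a baseline of $210,000 and an extra $21,000, which shows how even the low end of the typical improvement range translates into real money.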

Email Elements to Test

When it comes to email marketing, three elements stand out as essential testing areas: subject lines, call-to-action buttons, and email content and layout. Each plays a unique role in moving subscribers from opening your email to taking the desired action.

Subject Lines

The subject line is the first impression your email makes, and it largely determines whether it gets opened or ignored. Testing different approaches can reveal what resonates most with your audience. For example, subject line length matters - keeping it between 41 and 70 characters is often effective, though shorter lines (under 50 characters) are better suited for mobile users to avoid truncation.
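
If you want to screen candidate subject lines before testing them, a simple length check can encode these rules of thumb. This Python sketch treats 41–70 characters as the target range and flags lines of 50 characters or more as at risk of mobile truncation; both thresholds are parameters because the right values depend on your audience and their email clients.

```python
def subject_line_notes(subject: str, target_range: tuple[int, int] = (41, 70),
                       mobile_limit: int = 50) -> list[str]:
    """Return human-readable notes about a subject line's length."""
    length = len(subject)  # counts Unicode code points, not rendered width
    low, high = target_range
    notes = []
    if low <= length <= high:
        notes.append(f"{length} chars: inside the {low}-{high} target range")
    else:
        notes.append(f"{length} chars: outside the {low}-{high} target range")
    if length >= mobile_limit:
        notes.append(f"{length} chars: may be truncated on mobile (limit ~{mobile_limit})")
    return notes


if __name__ == "__main__":
    for candidate in ["Ends tonight", "Alex picked three new arrivals for your weekend plans"]:
        print(candidate, "->", subject_line_notes(candidate))
```

Because `len` counts code points, some emoji count as more than one character here and may render differently in the inbox, so treat the output as a rough guide rather than a hard limit.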

Personalization is another powerful factor. Including a subscriber's name in the subject line can boost open rates by over 14%. Even tweaking the sender name can make a difference - using "Alex from [Company]" instead of just a company name has been shown to increase open rates by 0.53% and click-through rates by 0.23%.

Tone and urgency also play a role. Phrases like "Ends tonight" can create a sense of urgency, but their effectiveness depends on your audience. As Alex Killingsworth, an Email & Content Marketing Strategist at Online Optimism, notes:

"A single A/B test provides valuable insights, but the real magic happens when you continuously optimize your subject lines based on repeated testing".

You can also experiment with questions versus statements, positive versus negative framing, and the use of emojis. Interestingly, CoSchedule found that direct statements outperformed questions in open rates.

Once your subject lines grab attention, the focus shifts to driving action through your call-to-action buttons.

Call-to-Action Buttons

After engaging your audience with a strong subject line, the next step is to encourage action with an optimized call-to-action (CTA) button. Testing elements like format, copy, color, and placement can significantly impact performance. For instance, using buttons instead of text links can increase click-through rates by 27%. However, some newsletters, such as SitePoint's, have found success with simple text links.

The wording of your CTA button is especially important. Specific, benefit-driven phrases like "Get the formulas" often outperform generic ones like "Click Here" or "Read More." Campaign Monitor reported a 127% increase in click-through rates after improving CTA copy, and personalized CTAs have been shown to convert 42% better than generic ones.

Design and placement are equally crucial. High-contrast colors make buttons stand out, while strategic placement - such as above the fold in shorter emails or multiple CTAs in longer ones - can guide users effectively. Twilio SendGrid emphasizes:

"Your call to action is the strongest, most important piece of content you will ever include in your email".

Testing different button sizes, shapes, and urgency-driven phrases like "Ends Tonight" can help you identify what drives the best results.

Email Content and Layout

Once you’ve refined your subject lines and CTAs, it’s time to focus on the email’s content and layout to keep subscribers engaged. Testing copy length is a great starting point. While some audiences prefer concise, punchy messages, others may respond better to detailed, informative content. Morgan Brown from GrowthHackers advises:

"Cut the length of your email copy in half. Now cut it in half again".

With attention spans often cited as being as short as eight seconds, brevity usually works best.

Formatting also plays a big role in readability. Bulleted lists can make content easier to scan than dense paragraphs. Positive language has been shown to increase conversion rates by 22%, and experimenting with tone, whether conversational or formal, can help you strike the right chord, often with help from AI-based copywriting tools. Adjusting white space and padding can further improve readability.

Visuals and layout are equally important. Visuals are widely said to be processed far faster than text (a figure of 60,000 times is often quoted), yet graphic-heavy emails don't always outperform simpler designs. Testing single-column versus multi-column formats and experimenting with hero image placement can yield insights. In some cases, even removing images altogether can be effective. Always include alt text for images in case they fail to load, and ensure your design is mobile-friendly. Details like readable font sizes and easy-to-tap buttons are crucial for a seamless mobile experience.

How to Set Up A/B Tests for Email Campaigns

A/B testing is a powerful way to refine your email campaigns, but it requires careful planning and execution to yield meaningful results. Luckily, most email service providers like HubSpot, Salesforce Marketing Cloud, and Mailchimp include built-in A/B testing tools. Here’s how to set up your tests step by step.

Step 1: Define Your Hypothesis

Start by crafting a clear "if/then" statement to guide your test. For instance: "If we use a personalized subject line, then open rates will increase because it stands out in the inbox". This provides a clear focus and makes interpreting results easier.

Camila Espinal, Email Marketing Manager at Validity, stresses the importance of keeping track:

"Log the hypothesis, outcome, and insight for every test. Otherwise, you'll just repeat your mistakes".

Once your hypothesis is ready, the next step is to decide what element you’ll test.

Step 2: Choose One Variable to Test

Focus on just one element at a time - like the subject line, CTA button color, or email layout. Testing multiple elements in one go makes it impossible to pinpoint which change led to the results. For example, if you test both the subject line and the CTA button and see improved click-through rates, you won’t know which change was responsible. Sticking to one variable ensures clarity in your results.
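
One way to enforce this rule before you hit send is to describe each variant as a record and check that exactly one field differs. The `EmailVariant` fields and the sample values below are hypothetical; swap in whatever attributes your email platform lets you set.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class EmailVariant:
    # Hypothetical attributes; use the ones your platform actually exposes.
    subject: str
    sender_name: str
    cta_text: str
    cta_color: str
    layout: str


def changed_fields(a: EmailVariant, b: EmailVariant) -> list[str]:
    """Names of the fields whose values differ between two variants."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


def variable_under_test(a: EmailVariant, b: EmailVariant) -> str:
    """Return the single field being tested, or raise if the test is confounded."""
    diff = changed_fields(a, b)
    if len(diff) != 1:
        raise ValueError(f"Expected exactly one changed field, found {diff}")
    return diff[0]


if __name__ == "__main__":
    control = EmailVariant("Your weekly picks", "Acme", "Shop now", "#0055ff", "single-column")
    variant = EmailVariant("Alex picked these for you", "Acme", "Shop now", "#0055ff", "single-column")
    print(variable_under_test(control, variant))  # prints: subject
```

If someone also changes the CTA text in the variant, `variable_under_test` raises instead of letting a confounded test go out.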

Step 3: Determine Sample Size and Split Your List

The reliability of your results depends on your sample size. Ideally, test each version on at least 20,000 recipients for metrics like open and click-through rates. For smaller lists, aim for at least 1,000 subscribers per variant. As Camila Espinal notes:

"With only a few hundred contacts, the results won't reach statistical significance, so the outcome is unreliable. You should aim for 10,000 people or more on a test if you can".

Use your email platform's randomization tools to split your list randomly into equal groups. Random assignment prevents biases from factors like location or purchase history. Tools like Evan Miller's Sample Size Calculator can also help you determine the exact number of recipients needed based on your baseline conversion rate and the minimum improvement you want to detect.
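
To see why a few hundred contacts are not enough, you can estimate the required sample yourself. The sketch below uses the standard normal-approximation formula for comparing two proportions (two-sided test, 95% confidence and 80% power by default). It is a rough stand-in for a calculator such as Evan Miller's, whose results may differ somewhat, and the 3% baseline and 20% relative lift in the example are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Recipients needed in EACH variant to detect a relative lift over a baseline rate.

    Normal-approximation formula for a two-sided, two-proportion z-test.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be between 0 and 1 and the lift must be non-zero")
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 when power = 0.80
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical: 3% baseline click-through rate, aiming to detect a 20% relative lift (3% -> 3.6%).
    print(sample_size_per_variant(baseline_rate=0.03, relative_lift=0.20))
```

With those inputs the estimate comes out at roughly 14,000 recipients per variant, in line with the advice to aim for 10,000 or more; smaller expected lifts push the number up quickly.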

| Deployment Method | How It Works | Best For | Risk Level |
|---|---|---|---|
| Sample/Winner (10/10/80) | Test on 10-20% of the list; send the winner to the rest | Non-urgent campaigns where maximizing results is key | Medium (Conditions may shift over time) |
| 50/50 Split | Divide the list equally and send both versions simultaneously | Quick tests or low-risk scenarios | High (Half the list gets the weaker version) |
| Hybrid (Test + Control) | Send the control to most of the list and the test variants to smaller groups | Revenue-focused campaigns where caution is key | Low (Minimizes risk to revenue) |
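
On platforms without built-in splitting, or when you want to audit how a split was made, a random assignment is short to write. This Python sketch implements the Sample/Winner row of the table above (and the 50/50 row when `test_fraction=1.0`); the subscriber list and the `seed` are hypothetical, and the Hybrid method is not modeled.

```python
import random
from typing import Optional


def split_for_sample_winner(subscribers: list[str], test_fraction: float = 0.20,
                            seed: Optional[int] = None) -> dict[str, list[str]]:
    """Randomly assign subscribers to variant A, variant B, and a remainder group.

    test_fraction=0.20 gives the 10/10/80 split; test_fraction=1.0 gives a 50/50 split.
    The remainder later receives whichever variant wins.
    """
    if not 0 < test_fraction <= 1:
        raise ValueError("test_fraction must be in (0, 1]")
    shuffled = list(subscribers)           # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # random assignment avoids systematic bias
    per_variant = int(len(shuffled) * test_fraction / 2)
    return {
        "A": shuffled[:per_variant],
        "B": shuffled[per_variant:2 * per_variant],
        "remainder": shuffled[2 * per_variant:],
    }


if __name__ == "__main__":
    emails = [f"user{i}@example.com" for i in range(1_000)]  # hypothetical list
    groups = split_for_sample_winner(emails, seed=42)
    print({name: len(members) for name, members in groups.items()})
```

For this 1,000-address list the sketch yields 100 recipients each for A and B and 800 in the remainder. Passing a fixed `seed` makes the split reproducible, which helps when you log the test.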

Once your list is divided and sample size set, it’s time to launch the test.

Step 4: Launch and Monitor the Test

Send both versions at the same time to avoid time-based biases. For reliable results, allow 24-48 hours for open rates and 4-5 days for click-through and conversion rates to stabilize. Although around 85% of email engagement often happens within the first 24 hours, some subscribers check their inbox later, so declaring a winner too soon can lead to inaccurate conclusions.

Track metrics that align with what you’re testing. For example:

- Subject line tests focus on open rates.

- CTA button tests measure click-through rates.

- Offers or pricing tests track conversion rates and revenue per email.
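
To keep each test judged on the right number, you can compute the metrics per variant and tie each test type to its primary metric and a minimum waiting period. This is a Python sketch; the field names, the test-type labels, and the choice to use the lower end of each waiting window are assumptions, not settings from any particular platform.

```python
from dataclasses import dataclass


@dataclass
class VariantResults:
    delivered: int
    unique_opens: int
    unique_clicks: int
    conversions: int
    revenue: float

    def open_rate(self) -> float:
        return self.unique_opens / self.delivered

    def click_through_rate(self) -> float:
        # Clicks per delivered email; some platforms report click-to-open rate
        # (clicks / opens) instead, so check which definition yours uses.
        return self.unique_clicks / self.delivered

    def conversion_rate(self) -> float:
        return self.conversions / self.delivered

    def revenue_per_email(self) -> float:
        return self.revenue / self.delivered


# Which metrics to judge each kind of test on, following the list above.
METRICS_BY_TEST = {
    "subject_line": ("open_rate",),
    "cta_button": ("click_through_rate",),
    "offer_or_pricing": ("conversion_rate", "revenue_per_email"),
}

# Minimum hours before judging, using the lower end of the windows described above
# (24-48 hours for opens, 4-5 days for clicks and conversions).
MIN_HOURS_BY_TEST = {"subject_line": 24, "cta_button": 96, "offer_or_pricing": 96}


def metrics_for(test_type: str, results: VariantResults) -> dict[str, float]:
    """Compute only the metrics that matter for this kind of test."""
    return {name: getattr(results, name)() for name in METRICS_BY_TEST[test_type]}


def ready_to_judge(test_type: str, hours_since_send: float) -> bool:
    """True once the minimum waiting period for this test type has passed."""
    return hours_since_send >= MIN_HOURS_BY_TEST[test_type]


if __name__ == "__main__":
    # Hypothetical results for one variant.
    a = VariantResults(delivered=10_000, unique_opens=2_100, unique_clicks=310,
                       conversions=42, revenue=1_890.0)
    print(metrics_for("offer_or_pricing", a), ready_to_judge("offer_or_pricing", 30))
```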

Most email platforms offer real-time dashboards, but for deeper insights - like how emails perform across different clients - consider tools like Litmus Email Analytics.

Step 5: Analyze and Apply A/B Test Results

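Before declaring a winner, check that the difference reaches a 95% confidence level (p < 0.05). This means a gap at least this large would show up less than 5% of the time by chance alone if the two versions actually performed the same. If results seem off, it's worth rerunning the test.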
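
For rates such as opens, clicks, or conversions, a common way to get that p-value is a two-proportion z-test. The Python sketch below uses only the standard library; the example counts are hypothetical, and your email platform or a statistics package may apply a different test (for example a chi-squared test or a Bayesian method) and report slightly different numbers.

```python
import math
from statistics import NormalDist
from typing import Optional


def two_proportion_p_value(successes_a: int, n_a: int,
                           successes_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two rates (pooled z-test)."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # both groups identical at 0% or 100%: no evidence of a difference
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def pick_winner(successes_a: int, n_a: int, successes_b: int, n_b: int,
                alpha: float = 0.05) -> Optional[str]:
    """Return "A", "B", or None when the difference is not significant at `alpha`."""
    if two_proportion_p_value(successes_a, n_a, successes_b, n_b) >= alpha:
        return None
    return "B" if successes_b / n_b > successes_a / n_a else "A"


if __name__ == "__main__":
    # Hypothetical subject-line test: 20% vs 22% open rate on 10,000 recipients each.
    print(two_proportion_p_value(2_000, 10_000, 2_200, 10_000))
    print(pick_winner(2_000, 10_000, 2_200, 10_000))  # prints: B
    # The same rates on only 100 recipients each are not significant.
    print(pick_winner(20, 100, 22, 100))  # prints: None
```

This test treats each recipient as a yes/no outcome, so it suits open, click, and conversion rates; revenue per email is a continuous metric and needs a different test.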

Once you’ve identified a winner, document everything in an A/B test log, including the original hypothesis, the winning variant, and key takeaways. This creates a valuable resource for future campaigns and helps avoid repeating unsuccessful tests. Apply the winning element to your next campaigns, but remember: audience preferences can change. Retest periodically to stay ahead.
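
A test log does not need special tooling. As one possible format, this Python sketch stores each test as a line of JSON with the fields named above (hypothesis, winning variant, key takeaway) plus a few extras; the schema, the file layout, and the example entry are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date
from typing import Optional


@dataclass
class ABTestLogEntry:
    # Hypothetical schema built from the items this step says to record.
    test_name: str
    hypothesis: str
    variable: str
    primary_metric: str
    winner: Optional[str]   # "A", "B", or None if there was no significant winner
    p_value: float
    takeaway: str
    run_date: str = field(default_factory=lambda: date.today().isoformat())


def to_log_line(entry: ABTestLogEntry) -> str:
    """Serialize one test as a single JSON line."""
    return json.dumps(asdict(entry))


def from_log_line(line: str) -> ABTestLogEntry:
    """Rebuild an entry from one line of the log."""
    return ABTestLogEntry(**json.loads(line))


def append_to_log(entry: ABTestLogEntry, path: str) -> None:
    """Append a test to a JSON Lines file so the history is easy to search and reload."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(to_log_line(entry) + "\n")


if __name__ == "__main__":
    entry = ABTestLogEntry(
        test_name="subject-personalization-01",
        hypothesis="If we use a personalized subject line, then open rates will increase "
                   "because it stands out in the inbox.",
        variable="subject",
        primary_metric="open_rate",
        winner="B",
        p_value=0.0005,
        takeaway="Personalized subject won; retest in a later quarter.",
    )
    print(to_log_line(entry))
```

One JSON object per line keeps the log append-only and easy to load into a spreadsheet or notebook when you review past tests.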

Best Practices for Continuous A/B Testing

Once you've set up your initial A/B tests, the next step is to embrace continuous testing. This approach fine-tunes your campaigns over time and builds a wealth of knowledge you can rely on. The best email marketers see it as an ongoing process that drives sustained success. Here’s how you can make it work.

Test One Element at a Time

Stick to testing one element at a time to clearly understand what’s driving the results. If you change multiple elements - like a subject line and a CTA button - in the same test, you won’t know which one caused the improvement. For example, if your click-through rate spikes, was it the new subject line or the redesigned button? Without isolating variables, you’re left guessing.

Focusing on one change per test ensures the results are actionable and helps you avoid basing future decisions on incorrect assumptions. Over time, this method reinforces best practices and builds a stronger foundation for future campaigns.

Use Consistent Metrics

Switching metrics from test to test makes it nearly impossible to track progress or spot trends. If one test measures open rates, another focuses on conversions, and yet another looks at revenue, you’ll struggle to draw meaningful comparisons.

Instead, choose metrics that align with your goals and stick with them. For example:

- If engagement is your focus, track open rates and click-through rates consistently.

- If driving revenue is the priority, measure conversion rates and revenue per email.

By using consistent metrics, each test builds on the last, creating a clear path for continuous improvement.

Iterate and Improve

Each test should inform the next. Keep a detailed record of your results to guide future strategies and avoid repeating past mistakes. This ongoing documentation helps you see what resonates with your audience and adapt as their preferences evolve.

Rob Gaer, Senior Software Engineer at Miro, emphasizes the value of A/B testing:

"Email marketing A/B testing has so many benefits, such as solving user problems and improving UX, driving growth and business impact, optimizing content for diverse audience segments as well as gaining insight and learnings you can apply to future campaigns".

Regularly revisit and retest successful elements to ensure they still align with your audience’s changing needs. By doing so, you’ll keep your campaigns fresh and effective over time.

Conclusion

A/B testing takes the uncertainty out of email marketing and turns it into a system powered by data. Instead of guessing what might work, you gain clear insights into what motivates your audience to open emails, click links, and take action. It shifts your strategy from relying on gut feelings to making decisions based on solid evidence.

And the numbers back it up. As the statistics at the start of this article show, businesses that test regularly see 37% higher open rates and 23% more revenue per email on average. Structured testing, much like ad creative testing, can dramatically improve campaign results. These aren't just small tweaks - they often mark the difference between campaigns that barely cover costs and those that drive substantial revenue.

The real magic lies in continuous testing. With every experiment, you uncover new insights about your audience. Over time, these small but consistent improvements - like refining a subject line or perfecting a call-to-action - add up. The result? Campaigns that perform far better than where you started.

Getting started doesn’t have to be overwhelming. Focus on one impactful element, such as your subject line or call-to-action, and test it with a clear hypothesis. Use what you learn to refine your approach. With each test, you’ll not only see better engagement but also gain an edge over competitors. The brands that embrace this process don’t just improve - they create a lasting advantage that grows stronger with every experiment.

FAQs

How do I pick the best thing to A/B test first?

To understand what works best in your email campaigns, start by testing one variable at a time. This approach helps you pinpoint what’s driving changes in performance. Some great starting points include:

- Subject lines: These can influence open rates dramatically.

- Send times: Timing can determine whether your email gets noticed or buried.

- Content: The tone, structure, or visuals may affect engagement.

- Offers: Different promotions might resonate more with your audience.

- CTAs (Calls to Action): The wording, placement, or design can impact click-through rates.

Focus on areas that align with your current goals. For example, if open rates are low, experiment with subject lines or send times. If the issue lies with click-through rates, prioritize testing content or CTAs. By targeting the elements that matter most, you can make meaningful improvements.

How big should my test groups be for reliable results?

To get trustworthy A/B testing results, it's crucial to have enough participants in each group to ensure statistical significance. Ideally, each group should include at least 1,500 recipients, meaning a total of 3,000 participants across both groups.

If your audience is smaller - fewer than 30,000 people - testing might not yield reliable insights. For mid-sized audiences, between 30,000 and 50,000, allocate 10% of your list to each group. For larger lists exceeding 50,000 recipients, testing with 5% per group should provide accurate outcomes.
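
Expressed as code, the tiered rule looks like this. This Python sketch simply encodes the thresholds stated above; treating exactly 50,000 as mid-sized and returning `None` below 30,000 are interpretation choices, not fixed standards.

```python
from typing import Optional


def recipients_per_group(list_size: int) -> Optional[int]:
    """Recipients to put in EACH test group, per the rule of thumb above.

    Returns None for lists under 30,000, where results may not be reliable.
    """
    if list_size < 30_000:
        return None
    percent = 10 if list_size <= 50_000 else 5
    # Integer ceiling of list_size * percent / 100, avoiding float rounding.
    return (list_size * percent + 99) // 100


if __name__ == "__main__":
    for size in (20_000, 40_000, 120_000):
        print(size, recipients_per_group(size))
```

At every list size the function accepts, each group ends up above the 1,500-recipient minimum mentioned earlier.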

When should I stop a test and choose a winner?

Stop an A/B test only when you have enough data to say with confidence which variation performs better. In practice, that means reaching the sample size you planned and a statistically significant difference in your primary metric, such as open rate or click-through rate; stopping the moment one variant pulls ahead risks declaring a winner based on noise. Make sure the test also runs for a sufficient period - often several days to a week - to account for typical audience engagement patterns. This ensures your results are reliable and based on real user behavior.